At AI Infrastructure Field Day 2, Solidigm provided comprehensive insights about its enterprise Solid State Drive (SSD) product line, research & development efforts, and how this fits into a cohesive strategy that aims to enable resilient and performant storage for AI workloads operating at scale.

Who is Solidigm?

Although the name might sound unfamiliar, the company, wholly owned by South Korean semiconductor manufacturer SK Hynix, has a rich history: it is in fact Intel’s former NAND business. Osmium Data Group also covered Solidigm’s AIIFD1 presentation, which offered insights on how to tackle AI data infrastructure energy challenges.

The company focuses on enterprise-grade SSDs, available in three series:

- D7 – NVMe-based, high-performance SSDs optimized for intensive workloads. These are mostly based on TLC[1] 3D NAND, with PCIe Gen5 connectivity on the latest models. High-endurance options are also available, based on SLC[2] NAND and rated for up to 50 DWPD[3].

- D5 – NVMe-based, read-intensive QLC[4] 3D NAND SSDs with guaranteed durability and extreme density. These are also suitable for mainstream workloads.

- D3 – SATA-based SSDs designed to lower TCO and extend the longevity of SATA systems while improving storage performance.
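A DWPD rating translates into total data written over the warranty period through the usual relation TBW = DWPD × capacity × 365 × warranty years. The sketch below applies it to a hypothetical 1.6 TB high-endurance drive rated at 50 DWPD over an assumed 5-year warranty; the capacity and warranty length are illustrative assumptions, not Solidigm specifications.

```python
def total_terabytes_written(dwpd: float, capacity_tb: float, warranty_years: int) -> float:
    """Total terabytes written (TBW) implied by a DWPD rating.

    Uses the common relation TBW = DWPD x capacity x 365 x warranty years
    (leap days are ignored).
    """
    return dwpd * capacity_tb * 365 * warranty_years


# Hypothetical drive: 1.6 TB, rated at 50 DWPD, assumed 5-year warranty.
tbw = total_terabytes_written(dwpd=50, capacity_tb=1.6, warranty_years=5)
print(f"{tbw:,.0f} TB written over the warranty period (about {tbw / 1000:,.0f} PB)")
```

Under these assumptions the drive could absorb roughly 146 PB of writes, which illustrates why SLC-based parts are positioned for write-heavy workloads.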

Fun fact: the nerds in the crowd[5] who remember Intel’s enterprise SSD product line may recall that Chipzilla followed exactly the same naming convention.

Putting aside the D3 Series (available in the 2.5-inch form factor), the D5 and D7 Series are available in a variety of modern EDSFF form factors.

With that, what did Solidigm talk about at AIIFD2, and what should we make of it?

Old News In Storage: Data is Growing™

This article’s author has kindly spared the reader the typical introductory topics around “unstoppable data growth”, “exploding storage needs”, and other hyperbole. As a new day starts, the moon sinks, the sun rises, and data is growing.

Sarcasm aside, organizations must strike a balance between contradictory needs while meeting business requirements and accelerating time to market: on one hand, seemingly infinite storage needs; on the other, very finite power, space, and cost limits.

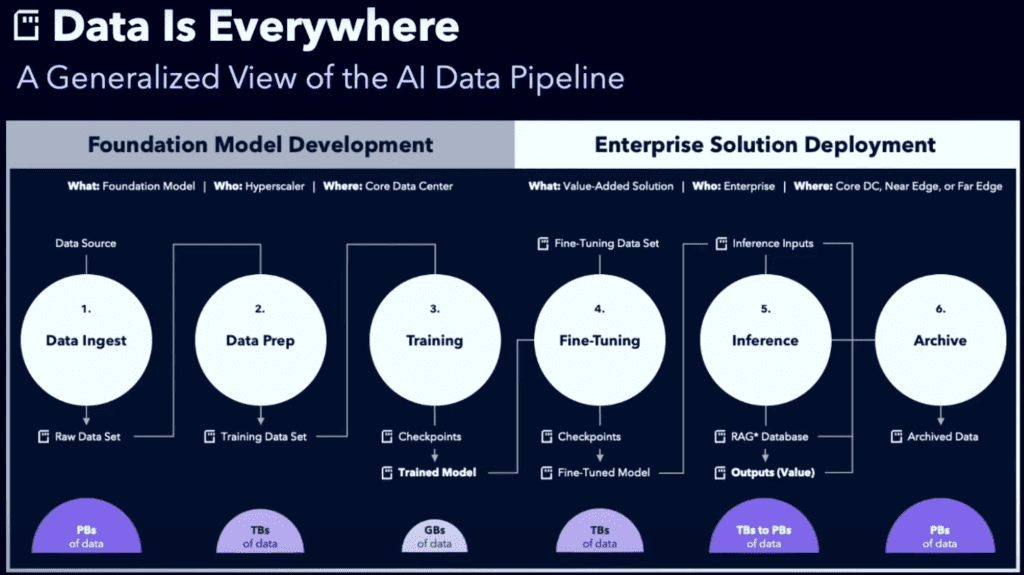

This is particularly true in the AI field, where data needs are taken to the next level. Taking LLMs[6] as an example, not all organizations need to train models, with the massive dataset requirements this implies. They may, however, need to fine-tune models to meet their own specific business requirements.

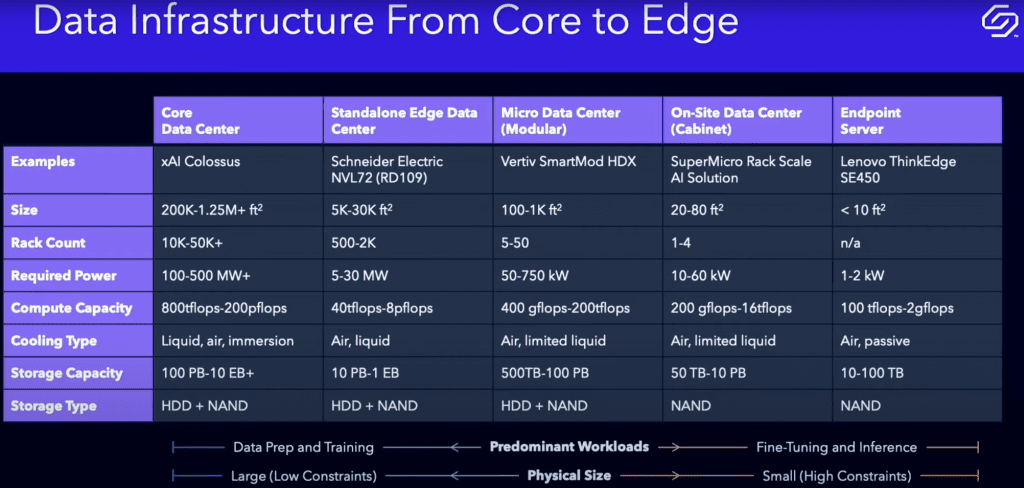

At each stage, different kinds of servers are required, with different specifications and, of course, different storage workload characteristics and patterns. Even within similar workload categories (at the bottom of Figure 2), different workload types emerge.

Of particular interest is the Predominant Workloads / Physical Size section at the bottom, towards the right, specifically the On-Site Data Center. At this scale, it is worth looking at size, rack count, power consumption, and capacity.

Capacity-dense SSDs clearly improve efficiency: each storage node (and thus each rack) holds more capacity. This reduces power consumption at the storage level, and the organization can then either shrink its physical footprint, or keep its server footprint intact and significantly increase capacity. And this does not even take additional data archival requirements into account.
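The consolidation argument boils down to ceiling division. The sketch below estimates how many storage nodes and racks a given raw capacity target requires. The 100 PB target, 24 drive slots per node, 20 nodes per rack, and the 30.72 TB comparison drive are all hypothetical values chosen for illustration; 122.88 TB is the exact capacity behind the rounded 122 TB figure. Data protection overhead is ignored.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding issues."""
    return -(-a // b)


def footprint(target_gb: int, drive_gb: int, drives_per_node: int, nodes_per_rack: int) -> tuple[int, int]:
    """Return (nodes, racks) needed to reach target_gb of raw capacity."""
    nodes = ceil_div(target_gb, drive_gb * drives_per_node)
    racks = ceil_div(nodes, nodes_per_rack)
    return nodes, racks


# Hypothetical scenario: 100 PB raw, 24 drive slots per node, 20 nodes per rack.
TARGET_GB = 100_000_000
for label, drive_gb in (("30.72 TB drives", 30_720), ("122.88 TB drives", 122_880)):
    nodes, racks = footprint(TARGET_GB, drive_gb, drives_per_node=24, nodes_per_rack=20)
    print(f"{label}: {nodes} nodes across {racks} racks")
```

Under these assumptions, quadrupling per-drive capacity cuts the node count fourfold (136 versus 34 nodes, 7 versus 2 racks), which is where the power and floor-space savings come from.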

122TB – The New Frontier

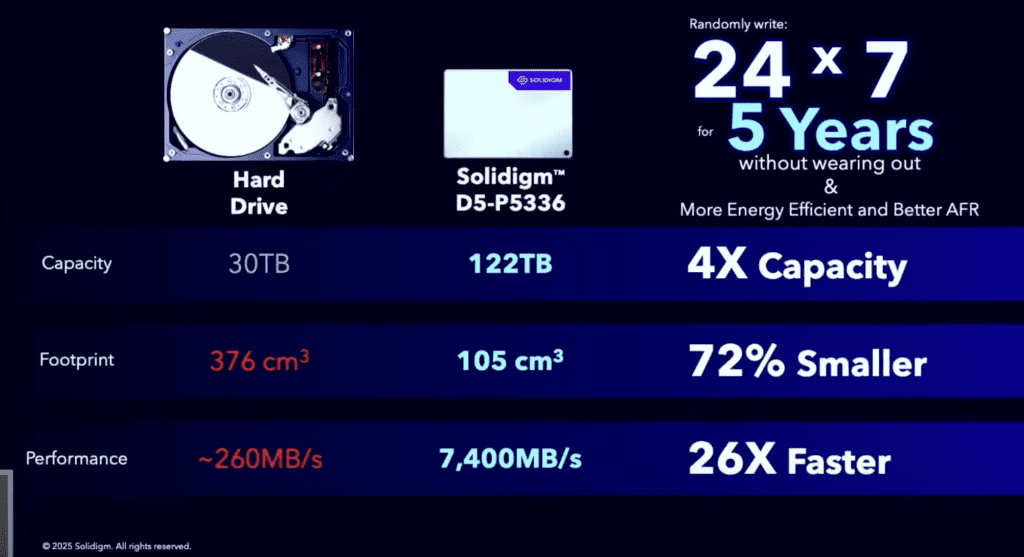

Enter the D5-P5336 NVMe QLC 3D NAND drive, sporting a massive 122 TB of capacity. Solidigm presents this drive as specifically engineered for guaranteed durability: it is designed to sustain 24/7 random writes over a period of 5 years, backed by warranty.

Although Solidigm is not the first storage vendor to break the 100 TB barrier (yes, TB as in terabytes – and no, not TiB / tebibytes) in a single drive, it is the first company to deliver 122 TB in a standard form factor, opening the door to massive commoditization of large-capacity QLC 3D NAND SSDs.
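The TB/TiB distinction is not pedantry at this scale: a tebibyte (2^40 bytes) is about 10% larger than a terabyte (10^12 bytes). A minimal conversion, applied here to 122.88 TB (the exact capacity behind the rounded 122 TB figure), looks like this:

```python
def tb_to_tib(terabytes: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return terabytes * 10**12 / 2**40


# The same drive capacity expressed in binary units.
print(f"{tb_to_tib(122.88):.2f} TiB")
```

The same drive would therefore appear as roughly 111.76 TiB in tools that report binary units.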

Those capacity-dense drives allow massive capacity consolidation, resulting in significant datacenter real estate gains and better energy efficiency, as seen in the screenshot below.

Although it is tempting to pick on HDDs, it’s worth reminding the reader that most hyperscaler environments rely on HDDs. The economies of scale can be staggering when considering the footprint of such environments. This ties in nicely with the values shown in Figure 2 and highlighted in the previous section.

Commoditize or Specialize?

But hold on, Osmium folks! Isn’t a famous flash array manufacturer already offering 150 TB flash modules on its proprietary architecture, with rumors of doubling this capacity in 2025 and increasing it further beyond that?

Yes, that is technically correct, but let’s also remember that this vendor recently released (Q1 2025) a software-defined version of its system, co-engineered with a well-known hyperscaler.

While this architecture is specifically designed for hyperscalers and high-performance workloads, it relies on a model where the storage vendor brings its architecture and a fully fledged data services stack, while the hyperscaler brings its own storage nodes and commodity components. Who says the hyperscaler couldn’t use D5-P5336 SSDs, at least on data nodes?

And yes, this vendor has often insisted on its ability to use flash more efficiently through its distributed flash translation layer (FTL). However, in the architecture presented (and given scale and cost constraints), disaggregating metadata and data does not pose a challenge from an FTL or SSD-internals optimization perspective.

Addendum 16-Jun-25 – on the form factor, Osmium Data Group industry friend & storage veteran Howard Marks notes:

- “DFMs fit in the slot Pure used to mount 2 U.2 SSDs in, so they’re a lot physically larger (almost 4X the volume) than U.2 or E1.S. More capacity in more space is less impressive.”

- “The 150 (TB) is the total amount of flash, since their FTL manages it. 122 (TB) is net useable after overhead and overprovisioning (128 (TB) raw)”
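Taking the figures in the second quote at face value (128 TB raw, 122 TB net usable), the share of flash reserved for overhead and overprovisioning can be computed directly. Overprovisioning is commonly quoted relative to usable capacity, so the sketch below prints both that ratio and the share of raw capacity.

```python
def reserved_fraction(raw_tb: float, usable_tb: float) -> tuple[float, float]:
    """Return the reserved share of flash relative to raw and to usable capacity."""
    reserved = raw_tb - usable_tb
    return reserved / raw_tb, reserved / usable_tb


# Figures from the quote above: 128 TB raw, 122 TB net usable.
of_raw, of_usable = reserved_fraction(128, 122)
print(f"{of_raw:.1%} of raw capacity, {of_usable:.1%} relative to usable capacity")
```

Either way, only around 5% of the raw flash is held back, which is modest for a drive in this capacity class.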

These notes confirm the impressive storage density Solidigm achieves within a constrained space and form factor.

At the same time, Solidigm announced a partnership with Dell Technologies on its PowerScale scale-out flash storage systems, specifically the F910 and F710 Series. These systems see their capacity double, reaching almost 6 PB of effective capacity per node.
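Effective capacity figures generally multiply raw capacity by an assumed data-reduction ratio. As a rough sanity check, the sketch below assumes a hypothetical node with 24 drive slots populated with 122.88 TB drives and a 2:1 data-reduction ratio; both the slot count and the ratio are illustrative assumptions, not Dell’s published specifications.

```python
def effective_capacity_pb(drive_tb: float, drive_slots: int, reduction_ratio: float) -> float:
    """Effective capacity in PB = raw capacity x assumed data-reduction ratio."""
    raw_tb = drive_tb * drive_slots
    return raw_tb * reduction_ratio / 1000  # decimal TB -> PB


# Hypothetical node: 24 slots x 122.88 TB drives, assuming 2:1 data reduction.
print(f"{effective_capacity_pb(122.88, 24, 2.0):.2f} PB effective")
```

Under these assumptions the result lands just under 6 PB, the same order as the figure quoted above; real-world effective capacity depends entirely on how well the stored data compresses and deduplicates.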

AI and Chill

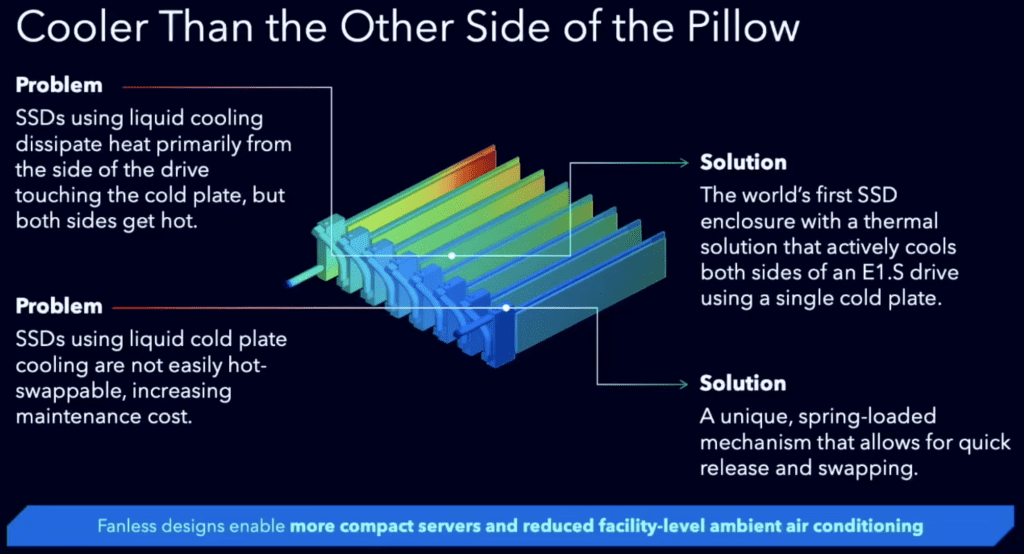

One last interesting announcement from Solidigm was a new way to cool SSDs: a liquid-cooled SSD enclosure designed to improve heat dissipation in AI and HPC servers.

The prototype on display looked interesting: it is designed to actively cool E1.S drives by combining liquid cooling with an aluminum plate (somewhere in the world, a Jony Ive rejoices). This approach reduces power consumption by eliminating the need to run fans at full speed.

The Osmium Perspective

Solidigm’s presentation at AI Infrastructure Field Day 2 was well structured and clearly demonstrated the company’s expertise in flash design.

What stood out for Osmium Data Group was a profound understanding of the AI model development cycle and the specific workload patterns that emerge at each stage, backed by typical hardware configurations and storage profile requirements.

Another point worth noting is the commodity nature of Solidigm’s designs, which rely on standard EDSFF form factors. This plays a significant role in standardizing the use of such massive SSDs across a broad range of storage platforms.

Some partnerships were announced, notably with Dell Technologies. Hyperscalers, however, like to build their own designs: they may instead source these SSDs directly and build a custom stack, or partner with storage vendors looking for common ground between commoditization and software-based innovation.

Osmium has always had a soft spot for SSDs and all things storage and flash related; it was therefore refreshing to see Solidigm’s continued pace of innovation.

Resources

The AIIFD2 Solidigm videos, with a high-level summary, are available here, as well as on YouTube.

- [1] Triple-level cell (3 bits per memory cell)

- [2] Single-level cell – very durable, but 3x less dense than TLC systems and 4x less dense than QLC systems

- [3] Drive Writes Per Day, a drive endurance measurement standard set by JEDEC. Find out more on Wikipedia.

- [4] Quad-level cell (4 bits per memory cell)

- [5] This could have been “The Intel Insiders”, a fitting dad joke indeed

- [6] Large Language Models